Jacob Ward: AI and the Shrinking of Human Choice

We’ve been recognized by the Academy for Lifelong Learning as an official Special Interest Group. This means we’ll be taking steps to coalesce into something that resembles a club, aspiring to connect not merely as writers and readers but as conversants with a shared interest in where our technologies are heading and what they’re doing to us.

We’re here to share discoveries and compare notes about our bewildering sci-fi world. In so doing, we’re chronicling our journey to AGI, the momentous milestone that most experts expect to arrive, differing mainly on how soon.

We’ll know we’re there when AI exceeds the intelligence of all humans and can perform virtually every job better. The world will be transformed in ways that are almost impossible to imagine.

SaratogAI is the name that ChatGPT generated out of the blue for our new group. It generated that logo, too.

AI at Work is the site on which we’ll continue to publish magazine-length feature stories rooted in AI-augmented conversations with experts.

SmartacusAI is the Substack on which we’ll supplement the semi-regular discussions we’ll host on Zoom.

As we discover thinkers whose visions are especially compelling, we’ll boil down their arguments with our Neural Net and pass them along. We’ll start this series with Jacob Ward.

Introducing Jacob Ward

I discovered Jacob Ward in this episode of the Singularity.FM podcast hosted by Nikola Danaylov. Ward argues that, in our increasing use of AI to automate, accelerate and simplify all manner of tasks, we’re narrowing the sphere in which human choices are made. That threatens our freedoms in ways we need to be thinking a lot more about.

Experiencing this phenomenon in my own use of AI, I propose this for a SaratogAI discussion.

A long-time tech journalist who now writes for the Omidyar Network, Ward is the author of The Loop: How Technology Is Creating a World Without Choices and How to Fight Back, which he published in 2022, months before ChatGPT stunned the world and brought Large Language Models to a mass audience.

Ward’s Substack, The Rip Current, features trenchant observations made in real time about AI. For example, hear what he said recently on PBS’s News Hour about President Trump’s effort to prevent states from regulating AI.

Scroll down to find the questions ChatGPT suggests we explore in connection with Ward’s arguments.

The Journalist and the Unsettling Prophecy

To understand Jacob Ward’s warning about artificial intelligence, it helps to picture him with a surfboard under his arm—half amused, half exhausted—offering a line that lands like a joke until you hear the regret underneath it: he covered technology for most of his career, spent the last decade focused on AI and how it affects human choice, and wrote “a big book” that came out just before ChatGPT. He predicted, he says, that we would not be ready for commercial AI’s effect on our brains. Watching that thesis “come to life” left him so dispirited that he “decided to spend the rest of the year surfing.”

It’s a disarming introduction: the surfer as prophet. But it’s also a clue to Ward’s temperament. He isn’t anti-technology; he’s allergic to the way modern systems quietly displace human judgment while flattering us with the feeling that we’re still in charge. He’s the kind of journalist who, after years of reporting, finds himself less interested in the newest breakthrough than in the unglamorous question of what it does to our habits, our attention, our relationships—and our moral courage.

Ward’s argument begins with a simple, unnerving analogy: AI will do to our ability to make decisions what Google Maps has done to our sense of direction. Not because we’re weak, and not because engineers are cartoon villains, but because two forces converge:

Companies have an incentive to make human behavior predictable.

Human brains have an instinct to outsource hard thinking whenever possible.

Put them together and you get something like a “prosthetic decision-making system”—a tool so convenient, so ever-present, and so profitable that it seeps into everything “like water between floorboards,” until we can no longer tell where our preferences end and the system’s nudges begin.

The question SaratogAI is well positioned to wrestle with is not whether these tools are powerful. That part is obvious. The question is whether we can keep them from quietly remapping the terrain of everyday choice—until the very idea of agency becomes something we feel rather than something we practice.

How Ward Got Here: From Gadget Journalism to Decision Science

Ward started out in the era when technology journalism was expected to sound a little like sports commentary: cheer the winners, track the launches, marvel at the speed. In his telling, “the fashion” in the ’90s and 2000s was that tech reporters were supposed to be fans—covering CEOs and products the way a beat writer covers a team.

He bristled at that. Part of it, he admits, is disposition: he describes himself as “a little anti-capitalist,” shaped by a mother who was a “fight the power academic” and a father who studied colonialism and slavery. That upbringing gave him a lifelong suspicion of stories that frame power as progress and profit as destiny.

But the more decisive shift came from a collision of reporting experiences:

A deep immersion in behavioral science while hosting Hacking Your Mind—a crash course in the last century of research showing how predictable and manipulable we are in the vast majority of our daily decisions.

A moment of witnessing, up close, a kind of moral anesthesia that can accompany technical ambition.

That second experience took place at a San Francisco dinner gathering of people who called themselves “BTech” (Behavioral Tech): neuroscientists, behavioral economists, product designers. The guests of honor were founders of a consultancy called Dopamine Labs: addiction science repurposed as product strategy. They spoke openly about habit loops, triggers, rewards, and the ways a brain, once habituated, will reverse-engineer a story to justify its own compulsion. In other words, if you get someone to do something repeatedly, they will often build a narrative to explain it: identity follows behavior as much as behavior follows identity.

Ward did what journalists do: he listened, took notes, and tried to locate the “larger pattern” the scene represented. What he saw that night wasn’t simply a cynical plot to addict the world. It was something more normal—and therefore more dangerous: good intentions mingled with commercial incentives, all wrapped in a sense of exceptionalism. Many apps in the room were pitched as “positive” (fitness, saving money, weight loss). Yet the tone suggested that the builders were immune to the vulnerabilities of the people they were trying to influence.

One of the participants, Nir Eyal—author of Hooked—dismissed the risk of addiction for the people in the room. “Let’s be honest,” he said, “the people in this room aren’t going to become addicted.” It is a line Ward returns to because it captures a widespread delusion in modern life: the idea that behavioral manipulation is something that happens to other people.

Ward’s reporting, especially his later conversations with people in the grip of addiction, blew that delusion apart. His thesis is not that technology preys on “weaker” minds. It’s that we are all built with the same ancient circuitry—and that modern systems are learning to speak to it with frightening fluency.

What Counts as a “Choice,” Anyway?

Ward’s critique becomes more unsettling when he redefines the very thing we think we’re defending.

When asked to define “choice,” he calls it “the rare instance where your brain is asked to actually process what’s going on around it and make a decision,” rather than letting an older, automatic circuitry run the show. He emphasizes that we live under a cognitive illusion: we believe we are making conscious choices all day long, but most of what we do—what we eat, where we drive, how we react, what we click—is the result of shortcuts built for efficiency.

Behavioral science often describes this as a tension between two modes:

Fast, automatic, instinctive thinking—habit-driven, cue-responsive, emotionally primed.

Slow, conscious, deliberative thinking—rare, effortful, and usually reserved for moments that force the issue (like being asked in court, “How do you plead?”).

Ward’s point is not to mock the human mind. These shortcuts are part of our evolutionary genius. They conserve energy, keep us alive, and free attention for survival. But they also make us exquisitely vulnerable to environments designed to cue our impulses.

He offers everyday examples that are difficult to shrug off:

The former drinker who avoids bars because “the bar makes the choice for me”—the smell, the sounds, the dark wood, the clink of bottles. Environment as decision-maker.

Avalanche rescue specialists who teach a formal process for evaluating snow risk and explicitly warn: do not make this choice with your emotions. Excitement collapses risk perception. Emotion as decision-maker.

And then he asks the question that matters for our era: if you are a company trying to shape behavior, which brain do you target?

Not the slow one. Not the one that deliberates, doubts, and asks for evidence. You target the fast one—the one that loves certainty, habit, identity, and emotional reward. The system doesn’t have to convince your better angels; it only has to make the default path easier than resistance.

That is “The Loop”: an ancient brain wired to offload decisions meets a modern industry wired to harvest predictability. Together they create a cycle that feels like freedom while functioning like guidance.

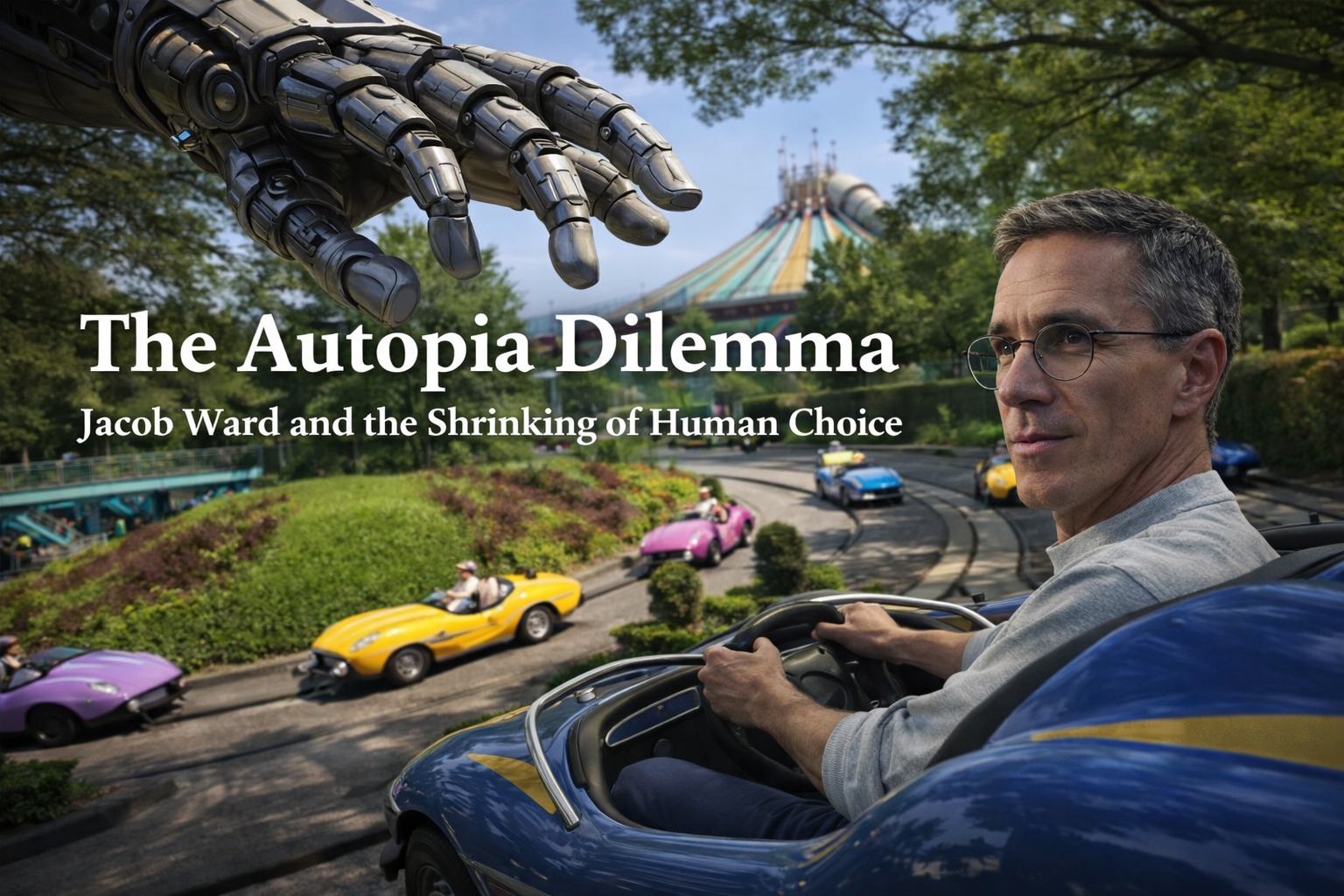

Autopia and the ‘Guidance Spine’: Feeling Free, Staying on the Track

Ward’s most powerful metaphor comes from childhood memory. His family goes to Disneyland. He is nine years old. He waits in line for Autopia with what he calls a “volcano of anticipation.” He grips the steering wheel. The tiny gas engine roars. For a moment he believes he has power: he is driving.

Then the first corner arrives. He misjudges it—of course he does—and the car slams into the hidden truth of the ride: a hard “guidance spine” running down the center of the track. The spine forcibly redirects the wheels. He realizes he isn’t really choosing where to go. He is steering within constraints, nudged and corrected by a system designed to keep him on a predetermined path.

And yet—and this is the point—none of that cancels the feeling. He “felt powerful.” He “felt free.”

This is Ward’s diagnosis of modern AI-mediated life. The systems are not primarily coercive; they are assuring. They give us the sensation of autonomy while shrinking the range of action we seriously consider. They do not imprison us; they guide us so smoothly that we stop noticing the rail.

In his phrasing: “Over and over again, we see that our behavior, which feels to us like free will and clear choices, is actually the result of guidance systems we’re helpless to obey”—whether those systems are internal (habit, tribal reflexes, self-serving narratives) or external (interfaces, algorithms, recommendation engines, automated screening tools).

The Autopia metaphor also explains why outrage is hard to sustain. A guidance spine is easier to accept than a chain. The system is helpful, not hostile. It reduces effort. And because we resent being reminded of our own vulnerability, we often respond by blaming those who are most visibly trapped—addicts, the poor, the “gullible”—instead of seeing the common circuitry.

Ward’s book chapter on the “guidance spine” pushes this into social ethics: he draws on research showing how poverty and scarcity don’t just reduce options; they reshape cognition itself, narrowing attention and consuming mental bandwidth. If you want to understand why people “make bad choices,” you must first ask what guidance systems they are trapped inside—economic, social, biological, technological. The moral work of a society, he suggests, is learning to see those guidance systems clearly enough to name them, measure them, and—eventually—regulate them.

From Spotify to Social Services: Where the Stakes Become Non-Optional

Ward is careful about where he draws the line. He is not especially interested in policing taste. If you want an AI-generated sea shanty explaining how to start an F-16, enjoy it. “The role of free will in commerce and entertainment,” he says, “is not the hill I want to die on.”

His “hill” is elsewhere: the places where automated decision systems expand into domains that are not optional—where the outcomes shape livelihoods, dignity, safety, and the texture of democracy.

He offers a spectrum:

Low-stakes surrender (mostly tolerable): Recommendation engines narrow choice through convenience. Spotify’s Discover Weekly becomes not a supplement but a substitute for exploration. The day comes when “make me a movie” shifts from novelty to default: first you specify preferences, then you let the system decide because it’s easier. Choice atrophies as habit.

High-stakes behavior shaping (quietly predatory): Social casino apps—“free-to-play” games with no cash prizes—become engines of compulsion. Ward’s account of Kathleen Wilkinson is painful because it is ordinary. A pop-up ad. A game. A habit. Then a dawning horror: she has spent roughly $50,000 over four years. Her husband reassured her early on because it “wasn’t real money.” That is the Autopia illusion in domestic form: the system feels like harmless entertainment until the guidance spine reveals itself as a financial trap.

Life-altering automation (the hill to die on): Now move from entertainment to institutions.

Automated systems decide who gets benefits, housing support, or food assistance—often with no explainability. Ward reports that even agencies using the tools, and sometimes the vendors that built them, cannot clarify why a decision was made.

AI screening systems sift job applicants at scale, potentially encoding bias in ways that are harder to detect than human prejudice.

Automated mediation tools referee communication between divorcing parents. Ward admits the ethical complexity: these systems can help people avoid destructive conflict. But what happens to children raised in a world where difficult conversations are navigated through AI coaching? Do they learn emotional skill—or learned helplessness?

Ward’s fear is not simply error. It’s abdication. Once systems are seen as authoritative, our brains do what they evolved to do: they offload responsibility. We stop asking questions because the machine “has it.” That reflex, multiplied across institutions, is a recipe for a society in which nobody can explain how decisions are made—and therefore nobody can truly contest them.

And here’s the twist he finds most “cruel”: behavioral science suggests we are often most eager to outsource decisions that are morally complex or emotionally unpleasant. If we can avoid the “ickiness” of deciding who gets a job, who gets a loan, who gets bail, we will. That creates a huge market for automated systems—exactly where oversight and accountability matter most.

Why Silicon Valley Keeps Building Anyway

Ward’s critique of tech culture is not that entrepreneurs are evil. It’s that many are sincerely convinced they are building a better world—and that this sincerity can become a kind of moral solvent.

At the BTech dinner, he recalls, many participants were building paternalistic but “positive” apps: get healthy, save money, lose weight. The builders meant well. Yet the industry’s default stance is often: build first, address harms later.

Ward offers his grandfather’s refrain as a counter-ethic: “That’s a great idea—let’s not do that.”

He’s currently developing a project with a working title that captures this sensibility: “Great Ideas We Should Not Pursue.” It is an attempt to locate something rare in American life: restraint. A framework for deciding not to build, not to deploy, not to optimize, especially when the justification is merely market fit and investment runway.

Ward is especially disturbed by what he calls a “cult-like zealotry” among some leaders in the current AI wave: utopian promises paired with oddly frank admissions of social costs, presented as if inevitability were a moral argument. The posture is: this will be worth it; therefore we must proceed. When critique arises, existential risk is sometimes used as a marketing device (“Look how powerful this is; you should be worried”) even as the product accelerates into the market.

For a civic-minded group like SaratogAI, this critique is an invitation to examine not only the technology but the ideology that drives its deployment: the assumption that capability implies obligation, and that society can “figure it out later.”

Fighting Back: Cultural Allergy and Legal Teeth

Ward’s work is sobering, but it is not despair. He insists he is optimistic about humanity—about our capacity for cooperation and civilization, about the marvel that strangers can share roads and conversations without killing one another. His concern is that we are in danger of letting efficiency crowd out the best parts of being human.

He sees two realistic counterforces.

A. Cultural resistance: protecting “positive friction”

Ward finds hope in the small ways people resist a frictionless, generated world:

Young people carrying point-and-shoot cameras—not because they must, but because they want to mediate reality themselves.

A rising slang term—“clanker”—used as a derogatory label for AI and for people who lean too heavily on it. It’s not a policy proposal, but it’s a signal: the beginnings of a cultural allergy.

He frames this as a defense of what makes life satisfying rather than merely efficient: “pointless acts of creativity,” difficult human connection, the friction of choosing and making. The threat of AI, in his view, is not simply that it can do things faster; it’s that it can convince us that speed and convenience are substitutes for meaning.

B. Legal frameworks: regulating psychological harm

Ward argues that American regulation is historically strongest in two domains: preventing death and preventing financial loss. But he believes we are approaching a third frontier: psychological harm—what he has begun calling “AI distortion.”

He points to emerging signals:

Insurers seeking relief from covering AI-related corporate losses—an early sign the risk is becoming legible in economic terms.

Lawsuits around chatbot-driven mental health crises—early attempts to establish liability for psychological manipulation.

His animating comparison is cigarettes: a product widely adopted before society had language, evidence, and legal mechanisms to fully recognize its harm. If cigarettes took generations to regulate, AI—moving at the speed of software—could reshape cognition and culture long before the guardrails arrive.

That’s the urgency he’s trying to lend us: not panic, but recognition. The sooner a society can name a harm, measure it, and prove it in court, the sooner incentives change.

Questions to Explore

Ward doesn’t offer a neat program. He offers a framework—and a demand: protect the rare, precious moments of real choice.

Here are discussion questions designed to move SaratogAI from agreement to inquiry, and from inquiry to practical experimentation.

A. Diagnosing “guidance spines” in our own lives

Where do you feel most “in control” in daily life—yet suspect you’re operating on an invisible track? Consider navigation, shopping, media, work metrics, even social norms.

What are the “bars” in your own environment—contexts that “make the choice for you”? How might AI systems replicate those cues digitally, at scale?

What choices have you noticed atrophying in yourself over the last five years (memory, navigation, reading stamina, decision patience, conflict tolerance)? Which of these matter most to protect?

B. The ethics of nudging vs. manipulation

Many tools aim at “good outcomes” (fitness, savings, adherence to medication). When does benefit become paternalism?

Should ethical standards depend on intent (helpful vs. exploitative), or on mechanism (behavior-shaping regardless of motive)?

If “people in this room” are not immune, what does responsible design look like for educated, privileged users who often believe they’re above manipulation?

C. High-stakes automation: lines we should draw

What domains should be considered “the hill to die on” locally and nationally—benefits, hiring, education, policing, child welfare, medical triage?

What should be required of any automated decision system used in public-facing services: explainability, appeal rights, human override, independent audit?

If automation reduces bias in some contexts but increases opacity in others, how should we choose between fairness and transparency?

D. Building cultural resistance

What “positive friction” practices could SaratogAI encourage—local experiments that strengthen agency rather than erode it? (Analog clubs, human-moderated deliberation forums, tech-free creative challenges, youth projects.)

Where do you already see a “cultural allergy” to AI in the Capital Region? Where is it absent—and why?

E. Legal and civic engagement

If psychological harm becomes a legal category, what evidence would matter most? What kinds of local case studies should communities begin documenting now?

What policies could municipalities, schools, and nonprofits adopt before state and federal law catches up—procurement standards, transparency requirements, restrictions on certain uses?

Closing: The Real Freedom Worth Defending

Ward’s deepest point is not that technology is bad. It’s that we misunderstand ourselves. We are not as rational and self-directed as the American mythology suggests. We are creatures of shortcut, story, and environment—beautifully capable of conscious choice, but only in rare, hard-won moments.

That’s why he prizes those moments. That’s why he wants to defend them. And that’s why his metaphor lingers: a child on Autopia, gripping the wheel, feeling powerful, feeling free—while the guidance spine quietly keeps the car on track.

The work before SaratogAI is not to reject AI. It is to decide where we will accept guidance, where we will demand accountability, and where we will insist on the “wonderful friction of being human”—the friction in which creativity, moral courage, and genuine connection are made.

If we can name the guidance spines now—before they disappear into the road—we may still have room to steer.